In this post I will walk through setting up an Angular front end that connects to and uses the TensorFlow models set up in previous posts. The model setup and training walkthrough can be found here and the Docker serving walkthrough here. This post is part of the TensorFlow + Docker MNIST Classifier series.

If you are not familiar with Angular, I highly recommend at least going through the official getting started tutorial before implementing any of the code below. Alternatively, you can use your own front end instead of Angular.

This is not an Angular tutorial and I will not be going through the code in detail. We will be cloning a project from my git repository and going through some of the key components that are specific to this project. To keep things organized we will be running our Angular application within a docker container.

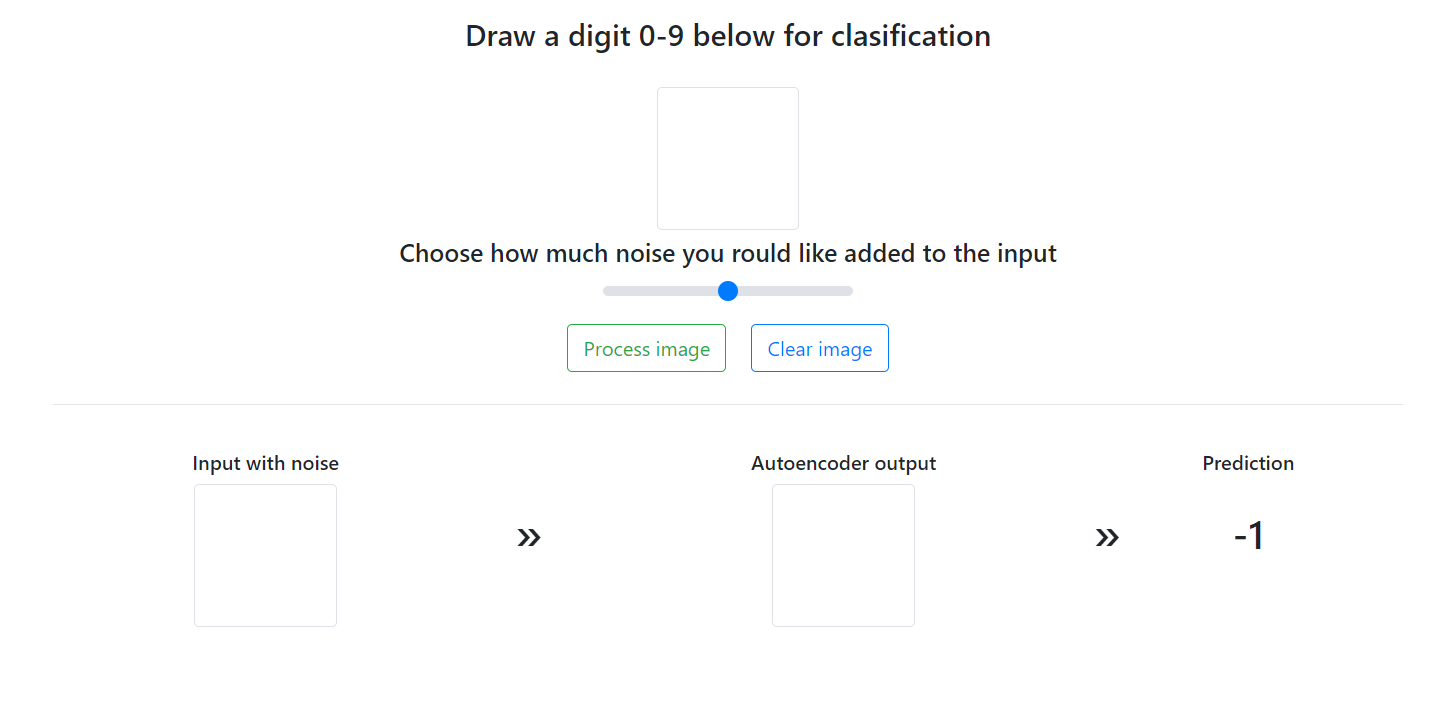

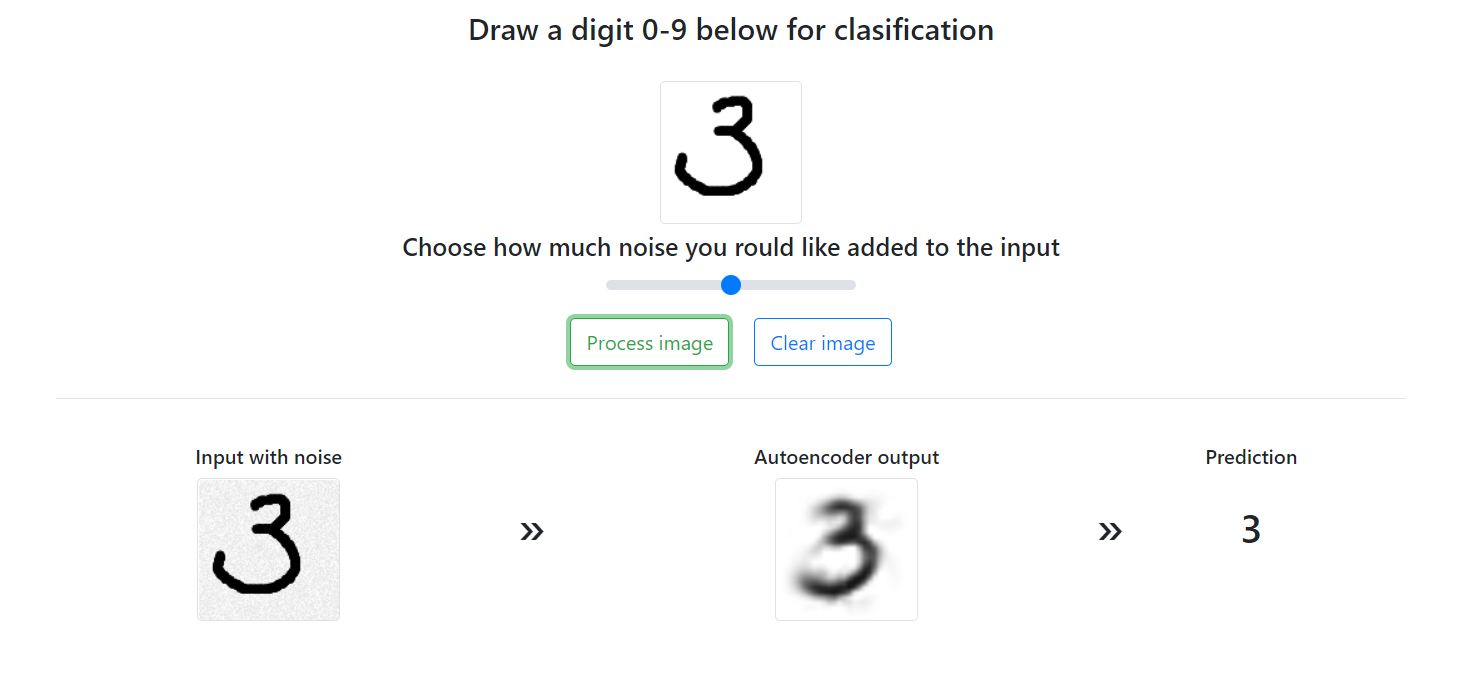

For reference, here is the final result we are targeting.

The setup

If you are using Docker on Windows you might need to share your drives. This can be done by navigating to Docker settings > Shared Drives and making sure the drives you are working with are checked.

We can start by cloning my Angular repository:

$ git clone https://github.com/adidinchuk/angular-mnist-project

The app folder structure should look like this:

    ├── src
    │   ├── app
    │   │   ├── components
    │   │   │   ├── digits
    │   │   │   │   ├── canvas
    │   │   │   │   ├── digit-control
    │   │   │   │   ├── prediction
    │   │   │   │   │.......
    │   │   ├── services
    │   │   │   ├── digits
    │   │   │   │   ├── api
    │   │   │.......
    │   ├── assets
    │   │   ├── css
    │   │   ├── js
    │   ├── environments
    │   │   │.......
    ├── proxy.config.json
    │.......

Some key objects and their responsibilities:

src/app/components/digits/canvas

Canvas object used for several things, primarily for getting user digit input, image scaling and displaying autoencoder results

src/app/components/digits/digit-control

Main component responsible for collecting user input (both noise option and digit)

src/app/components/digits/prediction

Very basic component responsible for displaying the classifier results

src/app/services/digits/api

Services used for communication with the TF serving instance
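The image scaling handled by the canvas component amounts to turning a drawing into the 784-value vector (28 × 28) that the classifier expects. The sketch below is a hypothetical helper, not code from the repository. It assumes a square RGBA pixel buffer (such as the `data` of a canvas `getImageData` call) whose side length is a multiple of 28, that the strokes are stored in the alpha channel, and that the model wants values between 0 and 1.

```typescript
// Hypothetical helper, not taken from the repository.
const MNIST_SIDE = 28;

// Downscales a square RGBA buffer to 28 x 28 by averaging the alpha channel
// over equally sized blocks, then normalises each value to the 0..1 range.
function toMnistVector(rgba: ArrayLike<number>, side: number): number[] {
  if (side % MNIST_SIDE !== 0) {
    throw new Error('side must be a multiple of ' + MNIST_SIDE);
  }
  if (rgba.length !== side * side * 4) {
    throw new Error('buffer length does not match side * side * 4');
  }
  const block = side / MNIST_SIDE; // source pixels per output pixel, per axis
  const out: number[] = [];
  for (let row = 0; row < MNIST_SIDE; row++) {
    for (let col = 0; col < MNIST_SIDE; col++) {
      let sum = 0;
      for (let dy = 0; dy < block; dy++) {
        for (let dx = 0; dx < block; dx++) {
          const y = row * block + dy;
          const x = col * block + dx;
          sum += rgba[(y * side + x) * 4 + 3]; // +3 selects the alpha channel (assumption)
        }
      }
      out.push(sum / (block * block) / 255);
    }
  }
  return out;
}
```

The resulting array is what would be passed as `data` to the service methods; if your canvas stores strokes in the colour channels instead, the channel offset needs to change accordingly.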

Let's take a closer look at how we access our TF serving endpoints. Here is what our main service methods look like:

    // Autoencoder endpoint to clean data before classification processing
    runAutoencoder(data): Observable<any> {
      return this.http.post(DigitsConfig.API_ENDPOINT_PROXY + DigitsConfig.AUTOENCODER_MODEL + ':' + DigitsConfig.TF_METHOD_NAME, {
        "instances": [data]
      });
    }

    // Classification endpoint to classify a 784 vector into a 0-9 digit
    runClassification(data): Observable<any> {
      return this.http.post(DigitsConfig.API_ENDPOINT_PROXY + DigitsConfig.CLASSIFICATION_MODEL + ':' + DigitsConfig.TF_METHOD_NAME, {
        "instances": [data]
      });
    }

And the configuration attributes:

    public static API_ENDPOINT_PROXY = '/v1/models/';
    public static AUTOENCODER_MODEL = 'autoencoder';
    public static CLASSIFICATION_MODEL = 'classifier';
    public static TF_METHOD_NAME = 'predict';
    public static TF_INPUT_PARAM_NAME = 'instances';
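To make the string concatenation in the service methods concrete, here is a small standalone sketch of the URL and request body that those configuration values produce. The `predictUrl` and `predictBody` helpers are hypothetical names introduced for illustration; the constants are copied from the configuration above.

```typescript
// Values copied from the configuration attributes above.
const DigitsConfig = {
  API_ENDPOINT_PROXY: '/v1/models/',
  AUTOENCODER_MODEL: 'autoencoder',
  CLASSIFICATION_MODEL: 'classifier',
  TF_METHOD_NAME: 'predict',
  TF_INPUT_PARAM_NAME: 'instances',
};

// Hypothetical helper: builds the relative URL for a model's predict method.
function predictUrl(model: string): string {
  return DigitsConfig.API_ENDPOINT_PROXY + model + ':' + DigitsConfig.TF_METHOD_NAME;
}

// Hypothetical helper: wraps a single input vector in the request body.
function predictBody(data: number[]): Record<string, number[][]> {
  return { [DigitsConfig.TF_INPUT_PARAM_NAME]: [data] };
}

console.log(predictUrl(DigitsConfig.CLASSIFICATION_MODEL));
// prints "/v1/models/classifier:predict"
console.log(JSON.stringify(predictBody([0, 0.5, 1])));
// prints {"instances":[[0,0.5,1]]}
```

Because the URL is relative, the browser sends it to whatever host served the Angular app, which is what lets a proxy decide where the request actually goes.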

Note that we are using a proxy for the mapping to ensure that any networking changes can be made outside the source code. For this we have a proxy.config.json file in the root directory with the following content:

    {
      "/v1/*": {
        "target": "http://SERVING:8501",
        "secure": false,
        "logLevel": "debug",
        "changeOrigin": true
      }
    }
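As a rough mental model of what this file asks the dev server to do, the sketch below maps a request path to the upstream URL it would be forwarded to. It is a simplified illustration, not the dev server's actual matching logic: `resolveUpstream` is a hypothetical helper, and treating a trailing `/*` as "everything under this prefix" is an assumption.

```typescript
// Same shape as the proxy.config.json entry above (only the field used here).
interface ProxyRule {
  target: string;
}

const proxyConfig: Record<string, ProxyRule> = {
  '/v1/*': { target: 'http://SERVING:8501' },
};

// Hypothetical helper: returns the upstream URL a request path would be
// forwarded to, or null when no rule applies.
function resolveUpstream(path: string, config: Record<string, ProxyRule>): string | null {
  for (const pattern of Object.keys(config)) {
    const rule = config[pattern];
    if (!rule) {
      continue;
    }
    // Assumption: a trailing "/*" means "any path under this prefix".
    const prefix = pattern.endsWith('/*') ? pattern.slice(0, -2) : pattern;
    if (path === prefix || path.startsWith(prefix + '/')) {
      return rule.target + path;
    }
  }
  return null;
}

console.log(resolveUpstream('/v1/models/classifier:predict', proxyConfig));
// prints "http://SERVING:8501/v1/models/classifier:predict"
```

Requests that do not fall under `/v1` (the application's own files, for example) return `null` here, meaning the dev server would handle them itself.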

Below I will cover exactly what SERVING means and how we make the Angular application use this proxy. In the meantime, our components can call these endpoints with something like this:

    this.api.runClassification(data).subscribe(
      class_res => {
        // predictions[0] holds the classifier output for the single instance we sent
        this.prediction.digit = this.extractPrediction(class_res.predictions[0]);
      },
      class_err => {
        console.log("Error occurred during the classification call.");
      });
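The `extractPrediction` helper called above is not shown in this post. One plausible shape for it, assuming the classifier returns one score per digit (ten values, indexed 0 through 9) for each instance, is a plain argmax. This is a hypothetical sketch, not the repository's implementation.

```typescript
// Hypothetical sketch: returns the index of the highest score, which is the
// predicted digit if the scores are ordered 0 through 9 (assumption).
function extractPrediction(scores: number[]): number {
  if (scores.length === 0) {
    throw new Error('prediction contains no scores');
  }
  let best = 0;
  for (let i = 1; i < scores.length; i++) {
    if (scores[i] > scores[best]) {
      best = i;
    }
  }
  return best;
}

const example = [0.01, 0.02, 0.9, 0.01, 0.01, 0.01, 0.01, 0.01, 0.01, 0.01];
console.log(extractPrediction(example)); // prints 2
```

If the model instead returns the class label directly, this step would not be needed.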

Fairly straightforward. In fact, due to my limited front-end knowledge, most of my time went towards figuring out how to use canvases correctly to collect user input and display the final result; the TF Serving integration was the easy part. I am certain there are many ways my components could be improved, so feel free to clone my repository and go nuts.

Running the docker container

Before building and launching the Docker instance, let's take a quick look at the Dockerfile. Notice that on start the application picks up the proxy.config.json file we looked at above through the --proxy-config proxy.config.json option.

    # base image
    FROM node:8.15.0

    # install chrome for protractor tests
    RUN wget -q -O - https://dl-ssl.google.com/linux/linux_signing_key.pub | apt-key add -
    RUN sh -c 'echo "deb [arch=amd64] http://dl.google.com/linux/chrome/deb/ stable main" >> /etc/apt/sources.list.d/google.list'
    RUN apt-get update && apt-get install -yq google-chrome-stable

    # set working directory
    WORKDIR /app

    # add `/app/node_modules/.bin` to $PATH
    ENV PATH /app/node_modules/.bin:$PATH

    # install and cache app dependencies
    COPY package.json /app/package.json
    RUN npm install

    # add app
    COPY . /app

    # start app
    CMD ng serve --host 0.0.0.0 --proxy-config proxy.config.json

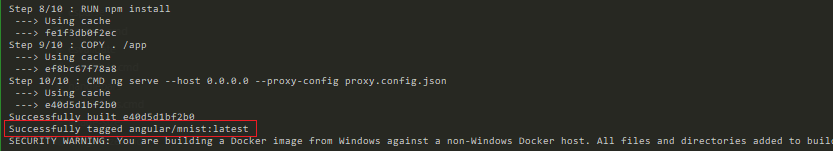

Before we run the container we need to build the image and make sure all dependencies are retrieved. We can do this using:

$ docker build -t angular/mnist .\angular-mnist-project

Depending on your network's performance this could take a few minutes, as all required dependencies will be retrieved. Notice that we tagged the image angular/mnist; the tag also appears in the build output.

Now that we have our image we can run the docker container using:

$ docker run --rm -p 4201:4200 --name angular angular/mnist

Breaking the command down:

--rm

make sure the container is automatically cleaned up on exit

-p 4201:4200

map the docker internal port 4200 to the external port 4201

--name angular

give our container a name for easier identification and termination

angular/mnist

use the image we built above

After running the command, if you open http://localhost:4201 in your browser you should see the running application.

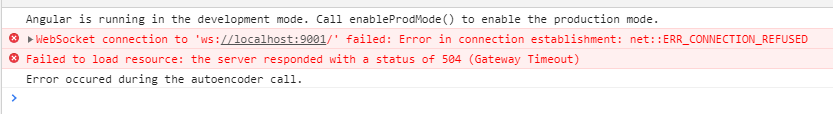

However, if you try submitting the form you will see that nothing happens, and the console logs show that the TF Serving endpoint is timing out.

This happens because the Angular Docker container and the TF Serving Docker container are isolated from each other. While you can reach both localhost:4201 and localhost:8501 from your machine, the containers cannot resolve these addresses. The solution is Docker's network functionality: we first create a Docker network, then update our docker run commands to attach each container to that network and give it a unique alias. These aliases let the containers reference each other easily.

Create a new docker network

$ docker network create MNIST

Remember to kill and remove any containers that are still active before running the updated commands:

$ docker kill angular

$ docker rm angular

$ docker kill serving

$ docker rm serving

Update and run the Angular docker run scripts

$ docker run --net MNIST --net-alias=ANGULAR --rm -p 4201:4200 --name angular angular/mnist

Update and run the TF Serving docker run scripts (original)

$ set TF_MODEL_DIR=%cd%/serving/mnist

$ docker run --net MNIST --net-alias=SERVING --rm -p 8501:8501 --name serving --mount type=bind,source=%TF_MODEL_DIR%/classifier,target=/models/classifier --mount type=bind,source=%TF_MODEL_DIR%/autoencoder,target=/models/autoencoder --mount type=bind,source=%TF_MODEL_DIR%/config,target=/config tensorflow/serving --model_config_file=/config/models.config

Assigning the alias SERVING to the TF Serving container with --net-alias=SERVING lets the Angular application reach the endpoints at SERVING:8501, since both containers are running on the MNIST network.

With this done the http://localhost:4201 application should now work as expected:

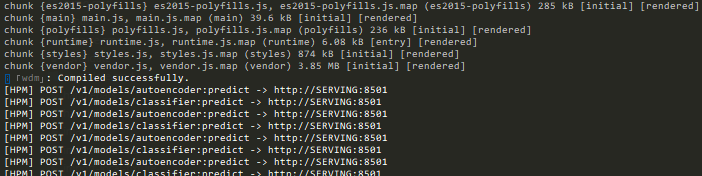

If you check the terminal where the Angular Docker container is running (or run docker logs angular), you can see that requests are being correctly forwarded to http://SERVING:8501.

That's it! I hope this was helpful to somebody out there. I know I learned a lot implementing and documenting this project.

Here is a summary of the components involved in this project:

| Section | Git Repository |
|---|---|
| Introduction | N/A |
| The Models | tf-mnist-project |
| Serving Models | tf-serving-mnist-project |
| The User Interface | angular-mnist-project |